OpenPTrack

OpenPTrack is open-source person-tracking software developed at REMAP. From 2018 to 2020, I contributed several core features:

Sensor Integration

I added support for Intel RealSense and Stereolabs ZED depth imagers in OpenPTrack, as well as the newer Azure Kinect. This work included containerizing the project so that all imagers run from a single Docker image, system tests and configuration for optimal imager performance, and documentation.

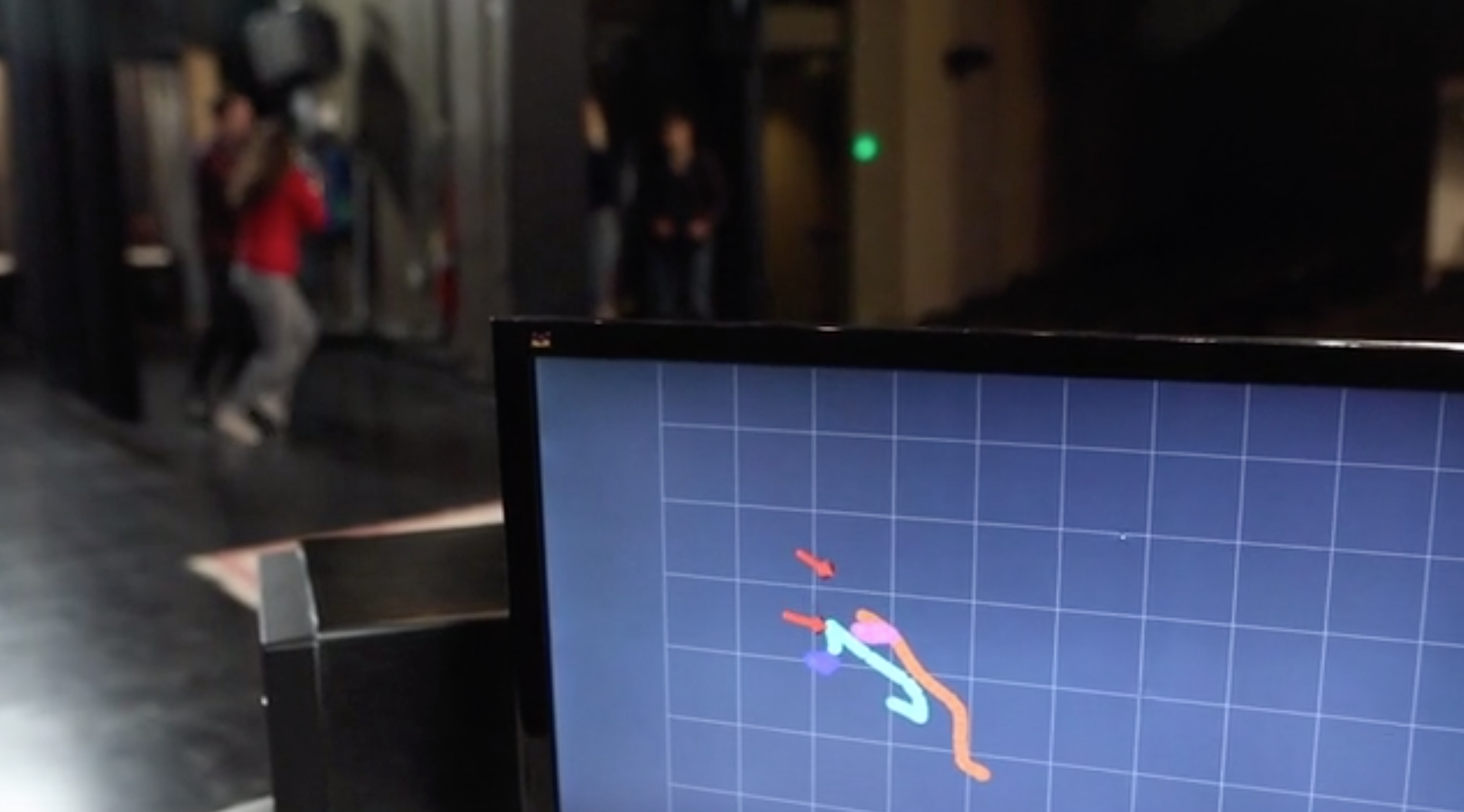

Visualizations

To present all of OpenPTrack’s data (spatial position, pose, objects, and facial recognition) more effectively, I developed a Unity-based visualization app. It is also a step toward integrating OpenPTrack with mobile AR. C# consumers receive raw tracking data from ROS, and experience-specific effects can easily be added in Unity. The Unity project is available on GitHub.

Experimental Kalman Filter Module

OpenPTrack provides several ‘centroids’, or person positions, from sources including a HOG person detector, YOLO object tracking, and pose recognition. Depending on the context, other data, such as phone positions in mobile AR scenarios, can also provide spatial location information. I worked on a Kalman Filter module for sensor fusion that combines all of these detections into a single position estimate. The resulting estimate is more accurate and more persistent (i.e., fewer ‘dropped tracks’). Initial tests have shown the Kalman-filtered position data to be more robust than any single tracking solution; a production-ready module is in the works!
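The module's internals aren't described here, so the following is only a generic sketch of Kalman-filter sensor fusion, not the OpenPTrack implementation. It assumes a constant-velocity motion model, treats the x and y axes as independent, and applies each detector's centroid as a sequential position-only update weighted by that source's noise variance. The names `AxisKF`, `FusedTrack`, and `SOURCE_VARIANCE`, as well as all variance and process-noise values, are hypothetical. When every detector misses a frame, the track keeps coasting on its prediction, which illustrates how fusion can reduce dropped tracks.

```python
from dataclasses import dataclass

# Hypothetical per-source measurement variances (m^2); real values would
# need to be measured for each detector and imager setup.
SOURCE_VARIANCE = {
    "hog": 0.09,    # HOG person-detector centroid
    "yolo": 0.04,   # YOLO-based centroid
    "pose": 0.02,   # skeleton/pose centroid
    "phone": 0.25,  # mobile AR phone position
}


@dataclass
class AxisKF:
    """Constant-velocity Kalman filter for a single axis.

    State is [position, velocity]; the symmetric 2x2 covariance is stored
    as its three distinct entries p00, p01, p11.
    """
    pos: float
    vel: float = 0.0
    p00: float = 1.0
    p01: float = 0.0
    p11: float = 1.0
    q: float = 0.1  # white-noise acceleration intensity (hypothetical)

    def predict(self, dt: float) -> None:
        # x <- F x with F = [[1, dt], [0, 1]]
        self.pos += self.vel * dt
        # P <- F P F^T + Q, Q from the continuous white-noise acceleration model
        p00 = self.p00 + 2 * dt * self.p01 + dt * dt * self.p11 + self.q * dt**3 / 3
        p01 = self.p01 + dt * self.p11 + self.q * dt**2 / 2
        p11 = self.p11 + self.q * dt
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float, r: float) -> None:
        # Position-only measurement: H = [1, 0], measurement variance r
        s = self.p00 + r   # innovation variance
        k0 = self.p00 / s  # Kalman gain for position
        k1 = self.p01 / s  # Kalman gain for velocity
        y = z - self.pos   # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P <- (I - K H) P, written out for the 2x2 case
        p00 = (1 - k0) * self.p00
        p01 = (1 - k0) * self.p01
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p11 = p00, p01, p11


class FusedTrack:
    """Fuses (x, y) centroids from several detectors into one 2D estimate."""

    def __init__(self, x: float, y: float, q: float = 0.1):
        self.kx = AxisKF(pos=x, q=q)
        self.ky = AxisKF(pos=y, q=q)

    def step(self, dt: float, detections: list[tuple[str, float, float]]) -> tuple[float, float]:
        """Predict forward by dt, then apply each (source, x, y) detection in turn.

        Sequential updates match a single batch update when the sources'
        noise is independent. With no detections this frame, the track
        coasts on its predicted motion instead of being dropped.
        """
        self.kx.predict(dt)
        self.ky.predict(dt)
        for source, x, y in detections:
            r = SOURCE_VARIANCE[source]
            self.kx.update(x, r)
            self.ky.update(y, r)
        return self.kx.pos, self.ky.pos


if __name__ == "__main__":
    track = FusedTrack(0.0, 0.0)
    print(track.step(0.1, [("hog", 1.1, 0.9), ("pose", 1.0, 1.0)]))
    print(track.step(0.1, []))  # every detector dropped this frame
```

Because each update is weighted by its variance, a low-noise source (here the hypothetical `"pose"` entry) pulls the estimate harder than a noisy one. A production filter would also need data association (matching detections to tracks) and a full 2D covariance, both of which this sketch omits.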

Installations

I maintained active OpenPTrack installations in UCLA’s Little Theater and REMAP offices, as well as at our collaborators’ labs, for use in embodied learning for children and immersive performance.